UDP Port Exhaustion on an Azure NVA

Recently we discovered some performance issues with DNS resolution on a production network. This network uses a third-party NVA cluster for routing and firewalling. To enable the most seamless failover (without having to rewrite Azure route tables), this cluster is set up with two Azure Standard Load Balancers: one internal and one external.

So far so good. This cluster has been operational for a while, but now and then there were seemingly random performance issues, mostly around monitoring solutions. The firewall logging, however, never indicated any issues, and the problem was not very reproducible. Until someone figured out that every so often DNS resolution failed.

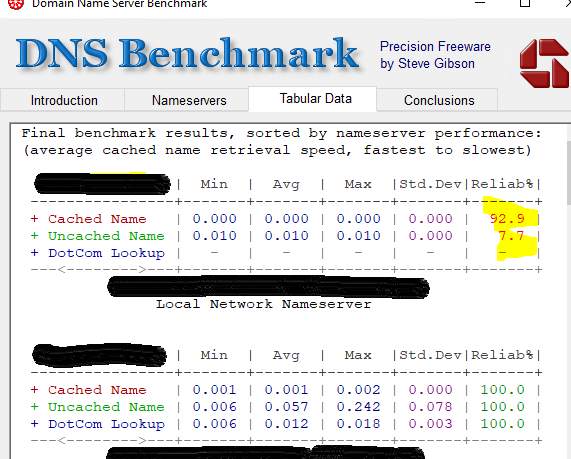

To test this further and confirm that DNS resolution was unreliable, a colleague did some performance testing using DNSBench. Sure enough, the problem was reproducible, especially when running several benchmark runs in a row.

In the picture, the first result is from the failing server, which was using CloudFlare (1.1.1.1 / 1.0.0.1) as forwarder. The second result is from another DNS server, which was using Azure DNS as forwarder (which goes out directly and does not use the NVA cluster). The exact result varied a bit. Sometimes it would not connect at all, and sometimes it would behave almost normally, especially during the first 1-3 runs of the benchmark tool. But eventually it would start failing.
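The same kind of burst can be approximated without DNSBench. The following is a minimal sketch, not the tool we used: it sends each DNS query from a fresh UDP socket, so every query leaves from a new source port and counts as a separate flow on the way out. The function names `build_dns_query` and `probe`, the default of 2000 queries, and the 1 second timeout are all assumptions made for illustration.

```python
import random
import socket
import struct


def build_dns_query(hostname: str, query_id: int) -> bytes:
    """Build a minimal DNS query for an A record (RFC 1035 wire format)."""
    # Header: ID, flags (recursion desired), 1 question, 0 other records.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE = 1 (A), QCLASS = 1 (IN).
    return header + qname + struct.pack("!HH", 1, 1)


def probe(server: str, hostname: str, count: int = 2000, timeout: float = 1.0) -> dict:
    """Send `count` queries, each from a new socket, and tally the outcomes."""
    results = {"answered": 0, "timed_out": 0}
    for _ in range(count):
        query_id = random.randint(0, 0xFFFF)
        # A new socket per query means a new ephemeral source port,
        # and therefore a new UDP flow for every device on the path.
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
            sock.settimeout(timeout)
            sock.sendto(build_dns_query(hostname, query_id), (server, 53))
            try:
                data, _addr = sock.recvfrom(512)
            except socket.timeout:
                results["timed_out"] += 1
                continue
        # Only count replies whose ID matches the query we sent.
        if len(data) >= 2 and struct.unpack("!H", data[:2])[0] == query_id:
            results["answered"] += 1
        else:
            results["timed_out"] += 1
    return results
```

Calling, for example, `probe("1.1.1.1", "example.com")` a few times in a row from a machine behind the NVA and comparing the `timed_out` count between runs gives a rough picture of the pattern described above.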

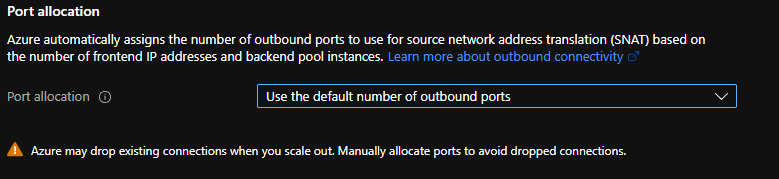

Not understanding what the problem was, I opened a support request with the NVA supplier. They suggested several changes to try, but none of them made a difference. While observing the behaviour in the firewall, I noticed that when I was really pushing the benchmark, I started seeing one-sided UDP ‘connections’ (for lack of a better word: packets that had gone out but for which the firewall had not yet received a reply). So I started wondering whether the problem might be related to the outbound connections going through the Azure Standard Load Balancer. When I originally configured it, the Azure portal did not yet support outbound rules, so this was done with PowerShell. But when I checked the portal I noticed this:

So I followed the link to see how this default number of ports is determined. And this is where I saw the problem: the default (for a backend pool of 1-50 VMs) is 1024 SNAT ports per VM. That’s not a lot :pensive:

Considering there will only ever be 2 VMs in this backend pool, I changed the setting from the default to a manually configured 31944 ports (the maximum value). So far, after making this change, the problem has not been reproducible!
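To make the arithmetic concrete, here is a small sketch of the port budget. The default allocation table and the figure of 64,000 SNAT ports per frontend IP are taken from the Azure Load Balancer documentation as I understand it, so treat both as assumptions and verify them against the current docs. The function names are made up for this example.

```python
# Default SNAT ports per backend instance, keyed by the upper bound of the
# backend pool size (assumption: values as listed in the Azure docs).
DEFAULT_PORTS_BY_POOL_SIZE = [
    (50, 1024),
    (100, 512),
    (200, 256),
    (400, 128),
    (800, 64),
    (1000, 32),
]

# Assumption: each frontend IP of the load balancer provides 64,000 SNAT ports.
PORTS_PER_FRONTEND_IP = 64000


def default_snat_ports(pool_size: int) -> int:
    """Return the default number of SNAT ports per instance for a pool size."""
    if pool_size < 1:
        raise ValueError("pool_size must be at least 1")
    for upper_bound, ports in DEFAULT_PORTS_BY_POOL_SIZE:
        if pool_size <= upper_bound:
            return ports
    raise ValueError("pool_size exceeds the largest tier in the table")


def fits_port_budget(ports_per_instance: int, instances: int, frontend_ips: int = 1) -> bool:
    """Check whether a manual allocation fits within the frontend IP budget."""
    return ports_per_instance * instances <= PORTS_PER_FRONTEND_IP * frontend_ips


print(default_snat_ports(2))       # default for our 2-VM pool
print(fits_port_budget(31944, 2))  # the manual value chosen above
```

With only 2 VMs in the pool, the default tier leaves most of a single frontend IP's ports unused, which is why a manual allocation makes such a difference here.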

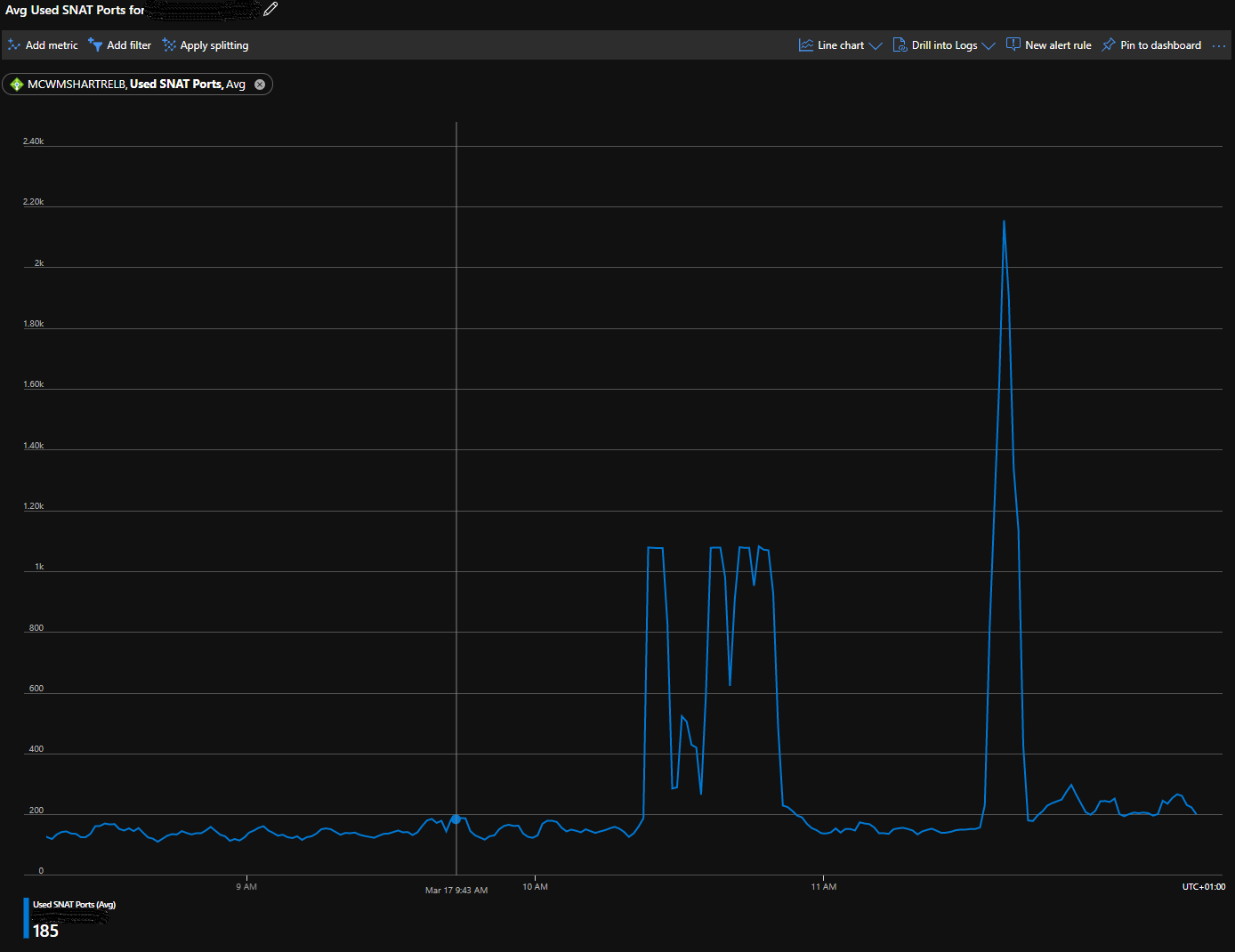

Looking at the load balancer's metric for open SNAT ports, you can also see that before the change there was a hard limit at 1024:

Lesson learned: when using an Azure Standard Load Balancer in combination with outbound rules, make sure you configure a suitable number of SNAT ports!